What Is an AI Agent? The Future of Finance Explained

June 18, 2025

In April, Norway’s $1.8 trillion sovereign wealth fund announced plans to save $400 million annually by integrating AI into its trading operations. That statement sends a clear message to the financial community: AI agents are the strategy of the future.

But what exactly is an AI agent? And how does it differ from a simple chatbot? This article breaks down how AI agents think, act, and evolve, revealing why traders, brokers, and investors can no longer afford to ignore them.

Key Takeaways:

- An AI agent is autonomous software that senses, reasons, and acts without waiting for constant user input.

- In finance, agents improve speed, accuracy, and 24/7 responsiveness across trading and compliance. Success requires clear goals, structured toolchains, sandbox testing, and integration with real-world APIs.

- Without oversight, agents can hallucinate, breach compliance, or expose data—governance must be embedded.

- Start with agent-assist use cases, measure performance, and scale only after passing security and audit checks.

What is an AI Agent?

From an engineering standpoint, an AI agent is autonomous software that observes live data, reasons about it, and executes actions aligned with a user-set goal, without constant human prompting.

Autonomy distinguishes agents from rule-based bots: they maintain internal memory, craft multi-step plans, learn from outcomes, and invoke external tools, such as trading APIs or custodial gateways, whenever those tools advance the plan, closing the loop between insight and execution.

Essential Capabilities

Most modern frameworks model an agent around five core capabilities: perception, reasoning, planning, memory, and learning—each powered by large language models (LLMs) or specialized neural networks and orchestrated through a loop of think → decide → act → reflect.

- Perception – ingest market, news, or chain data

- Reasoning – select strategies under uncertainty

- Planning – break goals into executable steps

- Memory – store and retrieve context over time

- Learning – refine behavior from outcomes
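The five capabilities and the think → decide → act → reflect loop can be compressed into a toy class. This is a minimal sketch, not any framework's API. The goal (buy on a 1% dip below the average of prior ticks), the step names, and the "learning" rule (demand a bigger dip after a failed trade) are all hypothetical and chosen only to make each stage visible.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyAgent:
    """Hypothetical agent showing perceive -> reason -> plan -> act -> reflect.

    Assumed goal for illustration: buy when the last price dips below the
    average of the preceding ticks by more than `threshold`.
    """
    threshold: float = 0.01                      # dip size that triggers a buy (1%)
    memory: list = field(default_factory=list)   # record of (price, steps, success)

    def perceive(self, feed: list[float]) -> dict:
        # Perception: turn raw ticks into features the reasoning step can use
        history = feed[:-1]
        return {"price": feed[-1], "reference": sum(history) / len(history)}

    def reason(self, obs: dict) -> bool:
        # Reasoning: is the current price a large enough dip to act on?
        return obs["price"] < obs["reference"] * (1 - self.threshold)

    def plan(self, buy: bool) -> list[str]:
        # Planning: break the decision into executable steps
        return ["check_risk_limits", "submit_order"] if buy else ["wait"]

    def act(self, steps: list[str], execute: Callable[[str], bool]) -> bool:
        # Action: hand each step to an external tool; all() stops at the first failure
        return all(execute(step) for step in steps)

    def reflect(self, obs: dict, steps: list[str], success: bool) -> None:
        # Memory + learning: store the outcome and demand a bigger dip after a failed trade
        self.memory.append((obs["price"], steps, success))
        if "submit_order" in steps and not success:
            self.threshold *= 1.5

    def step(self, feed: list[float], execute: Callable[[str], bool]) -> list[str]:
        obs = self.perceive(feed)
        steps = self.plan(self.reason(obs))
        success = self.act(steps, execute)
        self.reflect(obs, steps, success)
        return steps
```

For example, `ToyAgent().step([100.0, 100.0, 100.0, 98.0], execute=lambda s: True)` plans `["check_risk_limits", "submit_order"]`, because 98 sits more than 1% below the reference of 100. In a real stack, `reason` and `plan` would be LLM calls and `execute` would wrap trading APIs.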

Computer science literature traditionally describes five increasing levels of sophistication: simple reflex agents, model-based reflex agents, goal-based agents, utility-based agents, and learning agents. Trading analogs range from static arbitrage scripts to agents that self-tune portfolios in real time.
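The jump from the bottom of that ladder to the middle can be seen in two small decision functions. A simple reflex agent maps the current percept straight to an action through a fixed rule. A utility-based agent scores every candidate action and picks the best one. The 5 bps trigger and the payoff model (earn spread minus fees if both legs fill, lose the fees otherwise) are assumptions made up for illustration.

```python
def reflex_agent(spread_bps: float) -> str:
    # Simple reflex agent: a fixed condition-action rule on the current percept
    return "arbitrage" if spread_bps > 5 else "hold"


def utility_agent(spread_bps: float, fee_bps: float, fill_prob: float) -> str:
    # Utility-based agent: score every candidate action, pick the highest expected utility.
    # Assumed payoff: earn (spread - fees) when both legs fill; lose the fees otherwise.
    utilities = {
        "hold": 0.0,
        "arbitrage": fill_prob * (spread_bps - fee_bps) - (1 - fill_prob) * fee_bps,
    }
    return max(utilities, key=utilities.get)
```

With a 6 bps spread, 4 bps of fees, and a 50% fill probability, the reflex agent still fires while the utility-based agent holds, because the expected value of the trade is negative (0.5 × 2 − 0.5 × 4 = −1 bps).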

Generative AI and the New Agent Stack

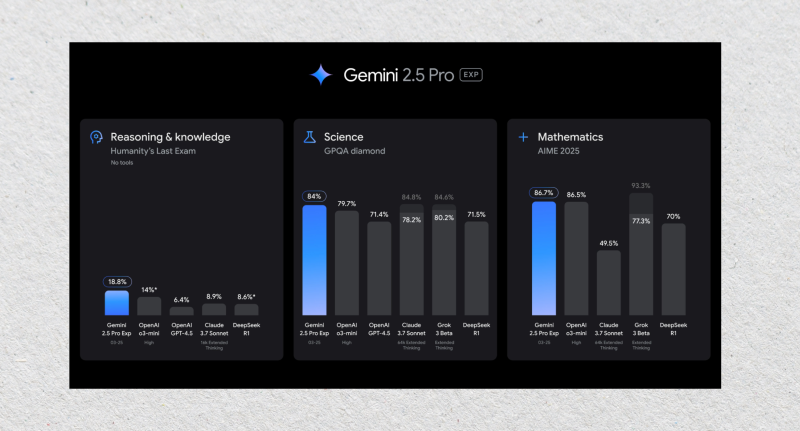

Generative models have raised the ceiling. Google Gemini 2.5 Pro offers a one-million-token context window, letting agents load months of trade chat into a single request, while open-source orchestrators such as LangGraph or CrewAI coordinate specialist sub-agents that fetch data, draft rationales, and execute orders.

Taken together, these components turn an AI agent from a glorified chatbot into a decision-making co-pilot that sees, thinks, and acts across the entire trade lifecycle—a capability the next section will contrast directly with traditional chatbots.

AI Agent vs Chatbot vs Assistant

At first glance, the AI agent vs. chatbot debate may look like semantics, since both speak natural language. The distinction is autonomy: chatbots wait for prompts, whereas agents proactively pursue goals, monitor data, and trigger tools the moment pre-agreed conditions surface.

Memory depth is another divider. A chatbot usually retains only the active conversation. An agent checkpoints every interaction, builds long-term embeddings, and consults that history when planning multi-step tasks such as laddered crypto orders across several exchanges.
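The checkpoint-and-consult half of that memory can be sketched in a few lines. This is a hypothetical in-process store, not the persistence API of any framework; it leaves out the embedding step and shows only how each turn is saved under a task id and read back before the next step of a multi-step task such as a laddered order.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Optional


class CheckpointMemory:
    """Hypothetical long-term store: every interaction is appended under a task id."""

    def __init__(self) -> None:
        self._log: dict[str, list[dict]] = defaultdict(list)

    def checkpoint(self, task_id: str, role: str, content: str) -> None:
        # Record each turn with a UTC timestamp so history can be replayed later
        self._log[task_id].append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "content": content,
        })

    def history(self, task_id: str, last_n: Optional[int] = None) -> list[dict]:
        # Consulted by the planner before the next step of a multi-step task
        entries = self._log.get(task_id, [])
        if last_n is None:
            return list(entries)
        return entries[-last_n:] if last_n > 0 else []
```

Before placing rung three of a ladder, the agent would call `history("ladder-btc")` to see which rungs already went out and on which exchange. A chatbot, by contrast, keeps only the active conversation.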

Here’s a quick comparison:

| Dimension | Chatbot | AI agent |
| --- | --- | --- |
| Trigger | Reactive | Proactive |
| Tool scope | Text only | APIs, ledgers, OMS |
| Memory | Short-lived | Long-term store |
| Autonomy | Supervised | Self-driving with optional oversight |

Microsoft’s Copilot remains a chatbot; it drafts a trade report but cannot place the trade. By contrast, Crypto.com’s AI Agent SDK wraps a comparable LLM layer with wallet functions, allowing a single message to both articulate the thesis and execute it.

For risk desks, the distinction matters: regulators tend to treat systems that can act on markets as algorithmic trading and may demand testing and circuit breakers even when a human approves each trade. Chatbots, being read-only, rarely trigger such oversight.

How AI Agents Work in Capital Markets

So, how does an agent actually operate within the fast-paced financial ecosystem?

Market Data Ingestion & Perception

Financial markets bombard an AI agent with millisecond-level noise: price ticks, quote depth, on-chain events, and corporate releases. The first step—perception—is to funnel those feeds into a unified stream the agent can query like a single, high-speed database.

Most trading desks pre-clean data with schema validators and outlier filters, then dispatch it to feature stores refreshed every few seconds. This keeps the reasoning layer from stalling on missing values, which is critical because stale inputs translate quickly into slippage once data lag stretches to several seconds.
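A schema validator and an outlier filter of the kind described above might look like this. It is a minimal sketch: the tick schema is hypothetical, and the filter uses the median absolute deviation (MAD), one common robust choice, rather than any particular desk's method. The threshold `k = 5` is also an assumption.

```python
import statistics

# Hypothetical tick schema: field name -> expected type
TICK_SCHEMA = {"symbol": str, "price": float, "ts": float}


def validate(tick: dict) -> bool:
    # Schema check: every required field is present with the expected type
    return all(isinstance(tick.get(name), kind) for name, kind in TICK_SCHEMA.items())


def filter_outliers(prices: list[float], k: float = 5.0) -> list[float]:
    # Robust filter: drop prices more than k scaled MADs away from the median
    med = statistics.median(prices)
    mad = statistics.median(abs(p - med) for p in prices)
    if mad == 0:
        return list(prices)          # no dispersion estimate; leave the batch untouched
    scale = 1.4826 * mad             # makes MAD comparable to a standard deviation
    return [p for p in prices if abs(p - med) / scale <= k]
```

On the batch `[100.0, 100.2, 99.9, 100.1, 250.0]`, the fat-finger print at 250 is dropped and the other four ticks pass through in order. Median-based statistics are used because a single bad print can drag a mean and standard deviation far enough to hide itself.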

Reason-Act Loops for Trading

Inside the reasoning loop, modern stacks rely on the ReAct pattern: the agent thinks, selects a function—say, best-venue routing—executes it, and then reflects on the outcome before the next cycle, tightening decisions with each iteration.
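The ReAct cycle can be reduced to a loop that appends Thought, Action, and Observation lines to a transcript until the policy decides to finish. In this sketch, a scripted function stands in for the LLM, and the `best_venue` tool with its two made-up quotes is hypothetical; a real agent would call venue APIs and prompt a model with the transcript.

```python
from typing import Callable


def best_venue(symbol: str) -> str:
    # Hypothetical tool: pick the venue with the lowest ask (quotes are made up)
    asks = {"VENUE_A": 100.02, "VENUE_B": 100.01}
    return min(asks, key=asks.get)


REACT_TOOLS: dict[str, Callable[[str], str]] = {"best_venue": best_venue}


def react(policy: Callable[[list[str]], tuple[str, str, str]], task: str, max_steps: int = 5) -> str:
    """Minimal ReAct loop: Thought -> Action -> Observation until the policy says finish."""
    transcript = [f"Task: {task}"]
    for _ in range(max_steps):
        thought, action, arg = policy(transcript)       # an LLM call in a real system
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            return arg
        observation = REACT_TOOLS[action](arg)          # execute the chosen tool
        transcript.append(f"Action: {action}[{arg}]")
        transcript.append(f"Observation: {observation}")  # read back on the next cycle
    raise RuntimeError("step budget exhausted without a final answer")


def scripted_policy(transcript: list[str]) -> tuple[str, str, str]:
    # Stand-in for the LLM: look up a venue first, then finish using that observation
    last = transcript[-1]
    if last.startswith("Observation: "):
        venue = last.split(": ", 1)[1]
        return ("Cheapest venue found", "finish", f"route BTC-USD buy to {venue}")
    return ("I need the cheapest venue", "best_venue", "BTC-USD")
```

`react(scripted_policy, "buy 1 BTC")` takes two cycles and returns `"route BTC-USD buy to VENUE_B"`. The `max_steps` budget is a design choice worth keeping in production, since it stops a confused model from looping on tool calls indefinitely.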

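More advanced workflows adopt ReWOO (Reasoning WithOut Observation), in which a planner drafts the full sequence of tool calls before any of them runs, workers execute the steps, and a solver combines the results. Because the whole plan exists up front, the AI agent can score it against a market-impact model and discard paths likely to widen spreads or trigger self-matching restrictions before committing capital.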
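In the ReWOO pattern (Reasoning WithOut Observation), a planner writes every tool call up front with placeholders such as `#E1` for evidence that later steps will need, workers fill those placeholders in order, and a solver makes one final decision. The sketch below hard-codes the planner's output and uses a toy square-root impact model as one of the worker tools, so the market-impact check happens before any live order. The tools, the 5 bps impact ceiling, and the stubbed mid price are hypothetical.

```python
import re


def limit_price(args: str) -> float:
    # Toy worker: mid price plus expected impact (in bps) gives a limit price
    mid, bps = map(float, args.split(","))
    return round(mid * (1 + bps / 10_000), 4)


# Hypothetical tools; real workers would query order books and a calibrated impact model
REWOO_TOOLS = {
    "mid_price": lambda symbol: 100.0,                   # stubbed quote
    "impact_bps": lambda qty: 0.5 * float(qty) ** 0.5,   # toy square-root impact
    "limit_price": limit_price,
}

# Planner output (an LLM call in a real system): the whole plan is written before any
# tool runs. #E1, #E2 are placeholders for evidence produced by earlier steps.
PLAN = [
    ("#E1", "mid_price", "BTC-USD"),
    ("#E2", "impact_bps", "64"),
    ("#E3", "limit_price", "#E1,#E2"),
]


def run_workers(plan: list[tuple[str, str, str]]) -> dict:
    # Workers: execute steps in order, substituting earlier evidence into arguments
    evidence: dict = {}
    for var, tool, arg in plan:
        arg = re.sub(r"#E\d+", lambda m: str(evidence[m.group()]), arg)
        evidence[var] = REWOO_TOOLS[tool](arg)
    return evidence


def solver(evidence: dict, max_impact_bps: float = 5.0) -> tuple:
    # Solver: one pass over all evidence decides whether to commit capital
    if evidence["#E2"] > max_impact_bps:
        return ("discard", None)
    return ("submit", evidence["#E3"])
```

For a 64-unit order the toy model predicts 4 bps of impact, so `solver(run_workers(PLAN))` returns `("submit", 100.04)`; at 400 units the predicted 10 bps exceeds the ceiling and the path is discarded. Compared with ReAct, the model is consulted once to plan rather than after every observation, which cuts LLM calls and latency.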

Crypto AI Trading Agents

In retail crypto, AI agent products such as Dash2Trade bundle these loops into turnkey dashboards. Their bots mine sentiment, rank volatility bursts, and fire grid or DCA (dollar-cost averaging) strategies automatically while traders watch risk meters rather than raw depth charts.
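The two strategy templates these bots fire are simple at their core. This sketch assumes an arithmetic (evenly spaced) grid and equal-sized DCA purchases. Commercial products layer their own sizing, rebalancing, and fee logic on top, which is not shown here.

```python
def grid_levels(lower: float, upper: float, n: int) -> list[float]:
    # Arithmetic grid: n evenly spaced price levels between lower and upper (inclusive)
    if n < 2 or upper <= lower:
        raise ValueError("need n >= 2 and upper > lower")
    step = (upper - lower) / (n - 1)
    return [round(lower + i * step, 8) for i in range(n)]


def grid_orders(levels: list[float], price: float) -> dict:
    # Resting buy limits below the current price, sell limits above it
    return {
        "buy": [lvl for lvl in levels if lvl < price],
        "sell": [lvl for lvl in levels if lvl > price],
    }


def dca_schedule(budget: float, periods: int) -> list[float]:
    # Dollar-cost averaging: equal purchases every period regardless of price
    return [budget / periods] * periods
```

A five-level grid from 90 to 110 yields levels at 90, 95, 100, 105, and 110; with the market at 101, the bot rests buys at 90, 95, and 100 and sells at 105 and 110, harvesting each oscillation through a level.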

Cloud-hosted platforms like Cryptohopper extend the model, running agents 24/7 across multiple exchanges via secure API keys and back-testing each tweak against years of tick data. The pitch: Sleep while your agent harvests micro-moves that appear at 03:00 UTC.

Risk & Compliance Hooks

Yet autonomy demands oversight. Under MiFID II, firms that trade algorithmically must maintain effective risk controls, including a kill switch and pre-trade limits, and keep audit records detailed enough to reconstruct their order flow. Seasoned desks, therefore, run agents in shadow mode first, measuring latency, fill quality, and compliance exceptions before granting real cash.
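A firm-side kill switch can be as small as a latch that trips on a breach and stays tripped until a named person resets it. This is a minimal sketch under assumed limits (a drawdown from peak equity and an order-rate ceiling). It is not a statement of what MiFID II specifically requires; real controls are set with compliance and usually run outside the agent process.

```python
from typing import Optional


class KillSwitch:
    """Hypothetical firm-side kill switch: trips on a drawdown or order-rate breach
    and blocks every new order until a named human resets it."""

    def __init__(self, max_drawdown: float, max_orders_per_min: int) -> None:
        self.max_drawdown = max_drawdown              # e.g. 0.01 = 1% from peak equity
        self.max_orders_per_min = max_orders_per_min
        self.tripped = False
        self.peak_equity: Optional[float] = None
        self.audit: list[tuple[str, str]] = []        # replayable (event, detail) log

    def on_equity(self, equity: float) -> None:
        # Track peak equity and trip when the decline from peak reaches the limit
        self.peak_equity = equity if self.peak_equity is None else max(self.peak_equity, equity)
        drawdown = 1 - equity / self.peak_equity
        if drawdown >= self.max_drawdown:
            self._trip(f"drawdown {drawdown:.2%}")

    def allow_order(self, orders_last_min: int) -> bool:
        # Pre-order gate: a runaway order rate also trips the switch
        if not self.tripped and orders_last_min >= self.max_orders_per_min:
            self._trip(f"order rate {orders_last_min}/min")
        self.audit.append(("order_check", "blocked" if self.tripped else "allowed"))
        return not self.tripped

    def _trip(self, reason: str) -> None:
        self.tripped = True
        self.audit.append(("kill_switch", reason))

    def reset(self, approver: str) -> None:
        # Only an explicit human action re-enables trading
        self.tripped = False
        self.audit.append(("reset", approver))
```

The switch latches on purpose: once tripped, the agent cannot talk its way back into the market, and the audit list records who re-enabled it and when in the event sequence.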

Once thresholds are met, dashboards switch to AI agent assist mode—surfacing recommended trades with one-click approval—before finally unlocking full automation. This phased path balances innovation with fiduciary duty and keeps regulators comfortable while the upside compounds.
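The phased path from shadow mode to assist mode to full automation is essentially a small state machine driven by review metrics. In this sketch the thresholds are hypothetical placeholders, and the rule that any breach demotes the agent all the way back to shadow mode is one conservative design choice among several.

```python
from enum import Enum


class Mode(Enum):
    SHADOW = 1   # trades a dummy account only
    ASSIST = 2   # proposes trades; a human approves each one
    AUTO = 3     # executes on its own within hard limits


# Hypothetical promotion thresholds; a real desk would agree these with risk and compliance
THRESHOLDS = {"max_p95_latency_ms": 50.0, "min_fill_ratio": 0.95, "max_compliance_exceptions": 0}


def next_mode(mode: Mode, p95_latency_ms: float, fill_ratio: float,
              compliance_exceptions: int) -> Mode:
    passed = (
        p95_latency_ms <= THRESHOLDS["max_p95_latency_ms"]
        and fill_ratio >= THRESHOLDS["min_fill_ratio"]
        and compliance_exceptions <= THRESHOLDS["max_compliance_exceptions"]
    )
    if not passed:
        return Mode.SHADOW                                # any breach: back to shadow mode
    return Mode(min(mode.value + 1, Mode.AUTO.value))    # promote one phase per review
```

Promoting only one phase per review period means an agent needs at least two clean reviews to reach full automation, which matches the gradual unlock described above.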

Building an AI Agent: From Idea to Deployment

Turning the concept of an AI agent into a production-grade system involves clarifying objectives, assembling the right tool chain, and enforcing disciplined testing. The roadmap below walks from whiteboard sketch to a fully regulated, live-trading stack.

- Goal Setting & Success Metrics

Start with a single, measurable purpose. A crypto desk might aim to shave two basis points from average execution cost; a hedge fund may target a Sharpe ratio above 1.8 while capping daily drawdown at one percent.

Translate that narrative goal into machine-readable key performance indicators—fill-price slippage, latency, value at risk, and model confidence. The agent will optimize these metrics automatically, so clarity now prevents later drift toward unintended behaviors.
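Three of those KPIs translate directly into code. The formulas below are the standard textbook definitions (slippage in basis points against the arrival price, annualized Sharpe ratio over 252 trading days, and maximum peak-to-trough drawdown); the function names and the sign convention (positive slippage means cost) are choices made for this sketch.

```python
import math
import statistics


def slippage_bps(fill_price: float, arrival_price: float, side: str) -> float:
    # Positive = cost: paid above arrival on buys, received below arrival on sells
    sign = 1 if side == "buy" else -1
    return sign * (fill_price - arrival_price) / arrival_price * 10_000


def sharpe_ratio(daily_returns: list[float], risk_free_daily: float = 0.0,
                 periods: int = 252) -> float:
    # Annualized Sharpe: mean excess return over its sample std dev, scaled by sqrt(periods)
    excess = [r - risk_free_daily for r in daily_returns]
    return statistics.mean(excess) / statistics.stdev(excess) * math.sqrt(periods)


def max_drawdown(equity_curve: list[float]) -> float:
    # Largest peak-to-trough decline, as a fraction of the running peak
    peak, worst = equity_curve[0], 0.0
    for value in equity_curve:
        peak = max(peak, value)
        worst = max(worst, 1 - value / peak)
    return worst
```

A buy filled at 100.02 against a 100.00 arrival price costs 2 bps, exactly the margin the crypto desk in the example wants to shave; a hedge fund would check `sharpe_ratio(...) > 1.8` and compute `max_drawdown` over each day's intraday equity curve against its 1% cap.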

- Frameworks & Tool-chains

Open-source frameworks give builders leverage. LangGraph offers low-level, graph-based orchestration with explicit state, which makes long-running agents easier to checkpoint, inspect, and resume, while CrewAI supplies a lightweight multi-agent layer independent of LangChain. AutoGPT prototypes ideas fast but demands strict tool-permission gating.

On the commercial side, LangChain’s hosted platform, container-native LLM runtimes, and feature stores manage scaling and observability. Teams can deploy separate reasoning, memory, and execution services, then attach cloud pub-sub queues to absorb intra-agent chatter at peak volatility.

- No-Code / Low-Code Builders

For teams without full-time engineers, an AI agent builder abstracts most plumbing. Postman AI Agent Builder lets analysts design workflows visually, bind them to API calls, and test prompts in one canvas before exporting a runnable container.

Google’s Vertex AI Agent Builder targets enterprises. It wires multi-agent coordination, vector search, and policy enforcement into a managed service, freeing compliance staff to focus on audit controls. Vendors like Markovate, an AI agent development company, offer turnkey builds on this stack.

- Integration with Order-Management & Custody

Whatever the toolkit, agents must speak the language of finance. Developers wrap FIX, REST, or WebSocket adapters so the execution module hits the same order-management system as human traders, then pipe fills to custody APIs that enforce multi-sig release and role-based withdrawal limits.
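At the FIX end of that adapter, an order is a string of `tag=value` fields separated by the SOH (`\x01`) character, framed by BodyLength (tag 9) and CheckSum (tag 10). The sketch below assembles a NewOrderSingle (35=D) following those framing rules. The comp IDs, sequence number, timestamps, and order values are placeholders, and a production adapter would use a full FIX engine (for example QuickFIX) that also handles logon, sequencing, and resends.

```python
SOH = "\x01"  # FIX field delimiter


def fix_message(fields: list[tuple[int, str]], begin_string: str = "FIX.4.4") -> str:
    """Assemble a tag=value FIX message, computing BodyLength (9) and CheckSum (10)."""
    body = "".join(f"{tag}={value}{SOH}" for tag, value in fields)
    # BodyLength counts the bytes after the 9= field up to the SOH before 10=
    head = f"8={begin_string}{SOH}9={len(body.encode('ascii'))}{SOH}"
    # CheckSum: sum of every preceding byte modulo 256, always three digits
    checksum = sum((head + body).encode("ascii")) % 256
    return f"{head}{body}10={checksum:03d}{SOH}"


# Hypothetical NewOrderSingle: buy 1 BTC-USD, limit 100.5 (IDs and times are placeholders)
order = fix_message([
    (35, "D"), (49, "AGENT_DESK"), (56, "BROKER_OMS"), (34, "42"),
    (52, "20250618-12:00:00.000"), (11, "agent-0001"), (55, "BTC-USD"),
    (54, "1"), (60, "20250618-12:00:00.000"), (38, "1"), (40, "2"), (44, "100.5"),
])
print(order.replace(SOH, "|"))
```

Routing the agent's orders through the same message format and OMS session as human traders means existing pre-trade checks, drop copies, and surveillance see agent flow with no special handling.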

- Testing & Evaluation Loops

Before touching money, agents run in a sandbox, replaying historical ticks at accelerated speed. Engineers log every decision, compare fills against benchmark TWAP curves, and flag anomalies. Open-source back-testing suites supply reproducible notebooks and plug-ins for Monte Carlo stress tests.
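The comparison against a TWAP curve reduces to two averages and a basis-point difference. This sketch assumes evenly spaced price samples (so TWAP is a plain mean) and flags any single fill more than 25 bps from the benchmark as an anomaly; both the threshold and the function names are hypothetical.

```python
def twap(prices: list[float]) -> float:
    # Time-weighted average price over equally spaced samples of the replayed window
    return sum(prices) / len(prices)


def average_fill(fills: list[tuple[float, float]]) -> float:
    # Quantity-weighted average of the agent's (price, qty) fills
    total_qty = sum(qty for _, qty in fills)
    return sum(price * qty for price, qty in fills) / total_qty


def evaluate_vs_twap(prices: list[float], fills: list[tuple[float, float]],
                     side: str = "buy", anomaly_bps: float = 25.0) -> tuple[float, list[float]]:
    """Return (performance vs TWAP in bps, fills flagged as anomalies).
    Positive performance means the agent beat the benchmark."""
    bench = twap(prices)
    avg = average_fill(fills)
    sign = 1 if side == "buy" else -1
    perf_bps = sign * (bench - avg) / bench * 10_000
    anomalies = [p for p, _ in fills if abs(p - bench) / bench * 10_000 > anomaly_bps]
    return perf_bps, anomalies
```

Against a replayed window averaging 100.00, buys at 99.90 and 100.00 in equal size beat TWAP by 5 bps with no anomalies, while a single buy at 100.50 underperforms by 50 bps and gets flagged for review.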

Once results match or beat the control strategy, desks flip to live shadow mode: the agent trades a dummy account in parallel with humans, delivering side-by-side P&L. Only after ninety days of stability does the supervisory board unlock full capital.

Agent Assist: Human-in-the-Loop & Augmented Decision-Making

Even the most sophisticated trading agent is fallible. Regulators and risk managers insist on meaningful human oversight, making a human-in-the-loop architecture a baseline rather than a luxury for any system that can move money or process personal data in highly regulated venues.

In practice, AI agent assist means the software pauses at policy checkpoints, pushes a proposed trade to a dashboard, and waits for a click, a typed reason code, or a risk-off override before continuing its loop. The pause usually lasts under ten seconds, even during peak volatility.

Risk officers see color-coded alerts detailing slippage estimates, open exposures, and AML flags; a single approval grants the agent a signed JSON token that authorizes exactly one action, keeping audit trails crisp, latency budgets tight, and approvals hard to repudiate.
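A single-use approval token can be built from the standard library: sign a JSON payload that binds the approver to one exact order, add an expiry and a random nonce, and refuse any nonce seen before. This is a sketch under assumptions. The in-memory key and nonce set stand in for an HSM and a shared store, and because HMAC uses a shared secret, stronger non-repudiation would call for an asymmetric signature (for example Ed25519) instead.

```python
import hashlib
import hmac
import json
import secrets
import time

SECRET = secrets.token_bytes(32)   # assumption: in production this key lives in an HSM or KMS
_used_nonces: set[str] = set()     # replay protection: each token authorizes one action


def issue_approval(order: dict, approver: str, ttl_s: float = 30.0) -> str:
    # Called when the supervisor clicks approve; binds the token to this exact order
    payload = {"order": order, "approver": approver,
               "nonce": secrets.token_hex(16), "exp": time.time() + ttl_s}
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    sig = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{sig}"


def redeem(token: str, order: dict) -> bool:
    # Called by the execution module right before it sends the order
    body, sig = token.rsplit(".", 1)
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        return False                              # forged or tampered token
    payload = json.loads(body)
    if payload["order"] != order or time.time() > payload["exp"]:
        return False                              # different action, or approval expired
    if payload["nonce"] in _used_nonces:
        return False                              # already spent: one token, one action
    _used_nonces.add(payload["nonce"])
    return True
```

The first `redeem` call with the approved order succeeds; a second call with the same token, a token presented for a different quantity, or a token whose payload was edited after signing all fail.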

Auth0’s reference pattern shows how asynchronous authorization flows, such as Client-Initiated Backchannel Authentication (CIBA), let supervisors approve high-value orders from a phone push while the agent waits in a suspended state, preserving human veto power without blocking sub-second micro-trades.

Article 14 of the EU AI Act will codify that safeguard for high-risk AI systems, mandating demonstrable oversight controls, operator training, and clear responsibility assignment. Agent builders should therefore wire oversight logic in from day one rather than retrofit it under pressure.

How to Select an AI Agent Development Company

Firms that lack in-house ML teams often outsource. Choosing the right AI agent development company can spare months of rework and regulatory heartache, because a poor vendor can deliver code that triggers false fills and compliance breaches under contracts that leave intellectual property ownership opaque.

- Frame the mission. Document your trading goal, latency budget, and compliance envelope, then publish target KPIs—slippage, VaR, uptime—so every bidder knows the scoreboard before writing code or pitching grand visions.

- Short-list domain experts. Filter providers that already run live agents in FX or crypto; ask for verifiable references and check published work, such as Markovate’s blockchain case studies, to confirm capital-market depth, not generic chatbot experience.

- Demand security evidence. Require a recent SOC 2 report or ISO 27001 certificate and a written key-management policy. These artifacts are faster to verify than glossy slide decks and prove the vendor’s controls have passed independent attestation.

- Inspect the tool chain. Confirm the stack—LangGraph, CrewAI, or Vertex—matches your architecture. Ask how they monitor prompt drift, store vector memories, and replay every decision for audit. A mismatch here causes expensive rewrites later.

- Run a shadow pilot. Fund a 90-day proof-of-concept that trades a dummy account in parallel with humans. Compare fills and risk metrics; release real capital only when the agent beats the benchmark and triggers zero compliance exceptions.

- Lock governance clauses. Bake quarterly model-risk reviews, penetration tests, and human-oversight checkpoints into the contract so the partnership adapts as regulations and market structures evolve.

Security, Ethics & Governance

Even a profitable AI agent can become a liability if hallucinations, feedback loops, or data leaks slip past controls. Millions may move before a human notices, so governance must live inside the code, not in a dusty policy binder.

Regulators increasingly codify that stance. MiFID II obliges trading venues to run circuit breakers that can halt or constrain trading during sharp price moves and requires firms that trade algorithmically to maintain kill functionality and pre-trade controls, while the EU AI Act demands human oversight proportional to system risk and automatic event logging for high-risk systems.

Mitigation starts with layered safeguards: rate-limit external calls, sandbox tool invocations, inject rule-based sentinels that veto outlier orders, and stream every prompt, action, and result into an immutable audit ledger for post-mortems or real-time kill-switch triggers.
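Three of those safeguards fit in a short sketch: a token-bucket rate limiter for external calls, a rule-based sentinel that vetoes outlier orders, and a hash-chained ledger. The limits are hypothetical. Note that hash chaining makes the log tamper-evident (any edit breaks every later hash) rather than physically immutable; true immutability comes from write-once storage.

```python
import hashlib
import json
import time
from typing import Callable


class TokenBucket:
    """Rate-limits outbound calls: `rate` tokens per second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens, self.last = capacity, clock()

    def allow(self) -> bool:
        # Refill in proportion to elapsed time, then spend one token if available
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def sentinel(order: dict, ref_price: float, max_notional: float = 50_000.0,
             max_dev: float = 0.02) -> bool:
    # Rule-based veto: block oversized orders or prices far from the reference price
    notional = order["qty"] * order["price"]
    deviation = abs(order["price"] - ref_price) / ref_price
    return notional <= max_notional and deviation <= max_dev


class AuditLedger:
    """Append-only, hash-chained log: editing any entry breaks verification."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = json.dumps(record, sort_keys=True)
        digest = hashlib.sha256((prev + body).encode()).hexdigest()
        # Store a copy so later changes to the caller's dict cannot alter the log
        self.entries.append({"record": json.loads(body), "prev": prev, "hash": digest})

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            body = json.dumps(entry["record"], sort_keys=True)
            if entry["prev"] != prev or hashlib.sha256((prev + body).encode()).hexdigest() != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

In a live loop, each proposed order would pass `bucket.allow()` and `sentinel(...)` before execution, and every prompt, action, and result would be appended to the ledger; a failed `verify()` is itself a reasonable kill-switch trigger.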

Finally, security is a living process. Schedule quarterly stress tests, rotate secrets behind hardware security modules, and review model outputs for new bias or drift. Treat the agent like a junior trader—train, supervise, and certify—because regulators will hold you equally accountable for its actions.

What’s Next for the Space? Multi-Agent Systems & Agentic RAG

The next evolution of the AI agent stack is collaboration. Instead of one generalist agent solving every task, teams deploy multiple specialists, each trained for a narrow skill, and route tasks between them in real time. This is the principle behind multi-agent systems.

One agent monitors news sentiment; another models volatility; a third executes hedges. Orchestrators like LangGraph handle dependencies, role hand-offs, and fault tolerance. The result: faster decisions, greater transparency, and resilience against failure or model drift.
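The sentiment → volatility → hedge hand-off can be sketched as three plain functions and an orchestrator. Everything here is a toy stand-in: keyword counting replaces a sentiment model, the 3% volatility ceiling is hypothetical, and the fallback action name is made up. The point is the shape: each specialist has one narrow job, and the orchestrator owns the routing and the failure path.

```python
import statistics


def sentiment_agent(headline: str) -> int:
    # Specialist 1 (toy): keyword score standing in for a sentiment model
    text = headline.lower()
    pos = sum(word in text for word in ("beat", "surge", "upgrade"))
    neg = sum(word in text for word in ("miss", "plunge", "downgrade"))
    return pos - neg


def volatility_agent(returns: list[float]) -> float:
    # Specialist 2: realized volatility (sample standard deviation of returns)
    return statistics.stdev(returns)


def hedge_agent(sentiment: int, vol: float, max_vol: float = 0.03) -> str:
    # Specialist 3: decides the hedge from the other agents' outputs
    return "buy_protection" if sentiment < 0 or vol > max_vol else "no_hedge"


def orchestrate(headline: str, returns: list[float]) -> dict:
    """Route work between specialists; if any specialist fails, record the error
    and fall back to a conservative action instead of crashing the pipeline."""
    trace: dict = {}
    try:
        trace["sentiment"] = sentiment_agent(headline)
        trace["vol"] = volatility_agent(returns)
        trace["action"] = hedge_agent(trace["sentiment"], trace["vol"])
    except Exception as exc:
        trace["error"] = repr(exc)
        trace["action"] = "halt_and_escalate"
    return trace
```

The returned `trace` doubles as the transparency record: it shows which specialist produced which input to the final decision. Frameworks such as LangGraph formalize the same routing as a graph with persisted state, retries, and human-approval nodes.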

In parallel, agentic RAG (retrieval-augmented generation) tackles another key weakness: stale memory. Agents query vector databases, legal archives, or blockchain data in real time before reasoning. This lets them cite facts, compare historical events, and reduce hallucinations, which is critical for regulated markets.
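The retrieve-then-reason order is the core of the pattern. This sketch ranks a tiny store by cosine similarity over bag-of-words counts and builds a prompt in which every passage carries an ID the model can cite. The sample documents are invented placeholders, and a production system would swap the toy `embed` for a learned embedding model and the dictionary for a vector database.

```python
import math
import re
from collections import Counter

# Hypothetical sample documents standing in for a vector database
DOCS = {
    "policy-17": "Sample policy: algorithmic orders above 50000 USD notional need human approval.",
    "desk-03": "Sample desk note: widen BTC limit bands around scheduled macro announcements.",
    "aml-09": "Sample procedure: escalate AML alerts to compliance within one business day.",
}


def embed(text: str) -> Counter:
    # Toy embedding: bag-of-words term counts
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    # Rank documents by similarity and keep the top k that share at least one term
    q = embed(query)
    scores = {doc_id: cosine(q, embed(text)) for doc_id, text in DOCS.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)[:k]
    return [(doc_id, DOCS[doc_id]) for doc_id in ranked if scores[doc_id] > 0]


def build_prompt(query: str) -> str:
    # Retrieval runs BEFORE reasoning; passages keep their IDs so the model can cite them
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query))
    return f"Answer only from the sources below and cite their IDs.\n{context}\n\nQuestion: {query}"
```

Asking "Do large algorithmic orders need human approval?" retrieves only `policy-17`, so the model answers from that passage and cites it; a query with no matching sources yields an empty context, which the agent can treat as a signal to escalate rather than guess.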

Open standards are maturing quickly. Protocols such as the Model Context Protocol (MCP) standardize how agents connect to tools and data sources, and tracing platforms such as LangSmith make multi-step agent runs observable; together they point toward agents that share context, memory, and task state across systems. In the next 12 months, brokerages may run agent networks across trading, risk, and compliance layers with coordinated goals.

Firms that invest now—experimenting with isolated agents—will be better positioned to adopt distributed agent ecosystems later, without overhauling their core infrastructure or retraining their teams from scratch.

Conclusion

AI agents are no longer experimental. They are already executing trades, monitoring risk, and managing data pipelines across crypto and traditional finance. Yet building or buying one requires more than plugging in a model—it demands goal clarity, secure architecture, and governance discipline.

Success comes from starting small. Choose one narrow objective, like reducing slippage or surfacing fraud alerts, and deploy the agent in shadow mode first. Measure its impact, audit its decisions, and refine its scope before granting full autonomy.

FAQ

What is an AI agent?

An AI agent is autonomous software that perceives its environment, reasons about data, and executes actions to achieve specific goals without constant human input. Unlike traditional bots, AI agents can adapt, plan, and learn over time.

How is an AI agent different from a chatbot?

Chatbots are typically reactive, following predefined scripts to respond to user inputs. In contrast, AI agents are proactive and capable of initiating actions, making decisions, and learning from interactions to improve performance.

What are AI agents in crypto?

In the cryptocurrency space, AI agents autonomously analyze market data, execute trades, and manage portfolios. They operate continuously, adapting to market changes.

What is an AI agent builder?

An AI agent builder is a tool or platform that enables users to create, configure, and deploy AI agents. These builders often provide interfaces for defining agent behaviors, integrating data sources, and setting operational parameters.