What is Data Tokenization, and How Does it Protect Your Sensitive Information?

Nov 20, 2024

We live in an information-saturated environment, and the more data that is collected and stored, the greater the risk that it will be misused. Preventing that misuse has become one of the main challenges of the 21st century. Today, virtually no business can function without databases, so keeping the information in them safe and secure is the responsibility of every organization.

This is where data tokenization emerges as a powerful defense mechanism. Let’s delve deeper into what data tokenization is, how it works, and how it strengthens information security compared to traditional methods.

Key Takeaways

- Data tokenization is a security process that replaces sensitive information with meaningless tokens, protecting it from unauthorized access.

- Unlike encryption, vault-based tokenization doesn't rely on decryption keys. Instead, it generates a random token value that maps to the sensitive information only inside the tokenization system.

- Tokenization protects sensitive information such as credit card numbers, Social Security numbers, and other personally identifiable information (PII).

- This solution can be implemented across various applications, including payment processing, data analysis, and customer data management.

The Idea of Data Tokenization

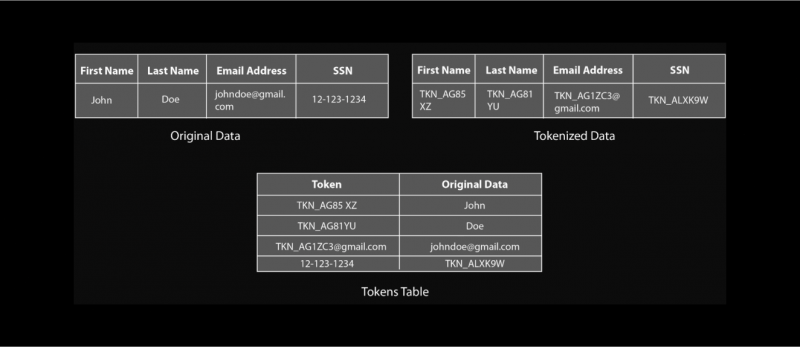

Data tokenization is a security technique that replaces sensitive details with a random string of characters called a token. Tokens are randomly generated and have no mathematical relationship to the original data, so they cannot be reverse-engineered: the original value can be recovered only by looking the token up in the tokenization system. The token acts as a substitute for the original information, allowing organizations to process and use it without exposing the actual sensitive data.
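A minimal Python sketch of vault-based tokenization makes the idea concrete. The `TokenVault` class and the `tok_` prefix are hypothetical; a plain dictionary stands in for the secure token vault, and tokens come from Python's `secrets` module, so they carry no information about the original value.

```python
import secrets


class TokenVault:
    """Minimal in-memory vault mapping random tokens to original values.

    Illustrative only: a real vault would be an encrypted, access-controlled
    database, not a Python dict.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The token comes from a cryptographically secure RNG, so it has no
        # mathematical relationship to `value`; the only link is the vault entry.
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # practically never loops
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Recovering the original is a lookup, not a computation.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")
print(token)                    # random on every run, e.g. tok_3f9c...
print(vault.detokenize(token))  # 4111111111111111
```

Anyone who obtains only the token learns nothing; without access to the vault there is no key or formula that turns it back into the card number.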

Think of it like this: imagine your credit card number is a house key. Tokenization gives you a unique stand-in key (the token) that opens only one specific door inside a secure vault (the tokenization system). Authorized systems can use the stand-in key to get what they need without ever handling the original house key (your credit card number), which improves both security and privacy.

Businesses today handle vast amounts of sensitive information, from primary account numbers (PANs) and credit card details to bank account numbers and personally identifiable information (PII). Securing this information is crucial for data integrity and customer trust. Data tokenization offers a reliable solution to protect this sensitive information by reducing its exposure and enhancing overall data security.

Types of Tokenization

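Format-preserving tokenization produces tokens with the same length and character set as the original data (for example, a 16-digit token for a 16-digit card number), so tokens can flow through existing systems without modification. It is often built on format-preserving encryption (FPE), a cryptographic technique that encrypts data while keeping its original format.

Vault-based tokenization generates a random token for each value and stores the token-to-value mapping in a secure database called a token vault. This guarantees secure storage of the original data, centralizes token management, and simplifies access control, since detokenization is possible only through the vault.

Vaultless tokenization applies a keyed algorithm, often format-preserving and deterministic, directly to the sensitive data to generate a token that retains the same format and length as the original. No mapping database is needed, but anyone holding the key can reverse the token, so the key must be protected as carefully as a vault would be.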
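To make the vaultless idea concrete, here is a toy Python sketch of a keyed, deterministic, format-preserving transform over digit strings. It uses a simple Feistel network with HMAC-SHA256 as the round function, loosely modeled on the structure of NIST's FF1 mode but not FF1 itself. The function names, round count, and key are hypothetical, and this sketch should not protect real data; production systems use vetted FF1/FF3-1 implementations with keys held in a key-management system.

```python
import hashlib
import hmac

ROUNDS = 10  # even, so the two halves end up back in their original order


def _round_value(key: bytes, round_no: int, half: str, width: int) -> int:
    # Pseudorandom round function: HMAC-SHA256 over (round number, half),
    # reduced modulo 10**width so it can be added to a width-digit number.
    mac = hmac.new(key, f"{round_no}:{half}".encode(), hashlib.sha256).digest()
    return int.from_bytes(mac, "big") % (10 ** width)


def _split(digits: str) -> tuple[int, int]:
    if not digits.isdigit() or len(digits) < 2:
        raise ValueError("expected a string of at least two digits")
    u = len(digits) // 2
    return u, len(digits) - u


def fpe_encrypt(key: bytes, digits: str) -> str:
    """Toy format-preserving encryption: digits in, same number of digits out."""
    u, v = _split(digits)
    a, b = digits[:u], digits[u:]
    for i in range(ROUNDS):
        m = u if i % 2 == 0 else v  # current length of the left half
        c = (int(a) + _round_value(key, i, b, m)) % (10 ** m)
        a, b = b, str(c).zfill(m)
    return a + b


def fpe_decrypt(key: bytes, digits: str) -> str:
    """Invert fpe_encrypt by running the rounds backwards."""
    u, v = _split(digits)
    a, b = digits[:u], digits[u:]
    for i in reversed(range(ROUNDS)):
        m = u if i % 2 == 0 else v
        prev_b = a
        prev_a = (int(b) - _round_value(key, i, prev_b, m)) % (10 ** m)
        a, b = str(prev_a).zfill(m), prev_b
    return a + b


key = b"demo-key-not-for-production"  # hypothetical key
token = fpe_encrypt(key, "4111111111111111")
print(token)                     # another 16-digit string
print(fpe_decrypt(key, token))   # 4111111111111111
```

The same key and input always produce the same token, which is what makes this approach deterministic; it also means the security of every token rests entirely on keeping the key secret.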

How It Works

As mentioned above, data tokenization replaces sensitive data with a randomized token value. The token has no direct relationship with the original information, so it is meaningless if intercepted. The tokenization system keeps the mapping between tokens and the original data in a secure environment, protecting the sensitive information from unauthorized access.

For example, consider credit card processing in retail or online transactions. A tokenization solution would replace the credit card number with a token during the transaction, keeping the primary account number confidential and safeguarded from potential threats.

Tokenized data can still be used within internal systems for statistical analysis and transaction processing, so it stays useful without exposing sensitive information.
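To illustrate how tokenized data can still support analysis, the Python sketch below assumes the tokenization system returns the same token every time it sees the same card number (a common option, but an assumption here). The `DeterministicVault` class and the sample transactions are hypothetical. Downstream code groups transactions by token without ever handling a card number.

```python
import secrets
from collections import Counter


class DeterministicVault:
    """Hypothetical vault that reuses one token per distinct value."""

    def __init__(self) -> None:
        self._value_to_token: dict[str, str] = {}
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        if value in self._value_to_token:  # same card -> same token
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:
            token = "tok_" + secrets.token_hex(8)
        self._value_to_token[value] = token
        self._token_to_value[token] = value
        return token


vault = DeterministicVault()

# Transactions as they arrive at checkout (illustrative sample data).
raw_transactions = [
    ("4111111111111111", 25.00),
    ("5500000000000004", 12.50),
    ("4111111111111111", 40.00),
]

# Downstream systems only ever see tokens, never card numbers.
tokenized = [(vault.tokenize(pan), amount) for pan, amount in raw_transactions]

# Analytics still work: count purchases and total spend per tokenized card.
purchases_per_card = Counter(token for token, _ in tokenized)
spend_per_card: dict[str, float] = {}
for token, amount in tokenized:
    spend_per_card[token] = spend_per_card.get(token, 0.0) + amount

print(sorted(purchases_per_card.values()))  # [1, 2]
print(sorted(spend_per_card.values()))      # [12.5, 65.0]
```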

Fast Fact

The concept of tokenization was created in 2001 by a company called TrustCommerce for their client, Classmates.com, which needed to significantly reduce the risks involved with storing cardholder data.

Key Components of Tokenization

Data tokenization relies on several core components to generate, store, and manage tokens securely. These components work together to protect sensitive information by ensuring that tokens remain secure and that access to the original details is strictly controlled. Here are the primary elements that make up a tokenization system:

Tokenization System

The tokenization system is the heart of this process. It generates and manages the tokens, replacing sensitive data with randomly generated values. This system typically includes algorithms that create tokens in a way that makes them unique and challenging to guess.

It also enforces security policies, like access controls and authentication mechanisms, ensuring that only authorized personnel or systems can access the tokenized information. To prevent unauthorized access, the tokenization system is often housed within secure environments, either on-premises or in the cloud.

Token Vault

The token vault is a secure database that stores the mapping between tokens and their corresponding original details. This vault isolates sensitive information, so the tokens have no link to the original data unless accessed through the tokenization system.

The token vault is designed to be heavily fortified with encryption, firewalls, and other security measures, as it is the only place where the actual data-token mapping exists. Access to the vault is tightly controlled, and only users or systems with special permissions can retrieve or de-tokenize the information.

Tokenization Algorithms

These algorithms generate the tokens that replace sensitive details and are designed so that tokens reveal no clues about the original data. Some algorithms create purely random tokens, while others generate tokens that follow specific patterns (for example, preserving the length of a credit card number).

The choice of algorithm depends on the use case and security requirements, but in every case the goal is that tokens cannot be reverse-engineered or guessed.
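As an illustration of a pattern-preserving algorithm, this Python sketch generates a random token with the same length as a card number and keeps its last four digits, which are often shown on receipts. Rejecting tokens that pass the Luhn checksum, so a token can never be mistaken for a valid card number, is a design choice assumed here rather than something every system does; the function names are hypothetical. A real system would also record the token-to-card mapping in the vault.

```python
import secrets


def luhn_valid(number: str) -> bool:
    """Return True if `number` passes the Luhn checksum used by card numbers."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def format_preserving_token(pan: str, keep_last: int = 4) -> str:
    """Random digit token, same length as `pan`, ending in its last digits."""
    if not pan.isdigit() or len(pan) <= keep_last:
        raise ValueError("expected a digit string longer than keep_last")
    tail = pan[-keep_last:]
    while True:
        head = "".join(secrets.choice("0123456789") for _ in range(len(pan) - keep_last))
        token = head + tail
        # Assumed policy: never return the real number, and never return
        # anything that passes Luhn (so it cannot pose as a real card).
        if token != pan and not luhn_valid(token):
            return token


token = format_preserving_token("4111111111111111")
print(token)  # 16 random digits ending in 1111
```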

Access Control and Authorization

Access control is a critical component of tokenization, ensuring that only authorized users or applications can access or de-tokenize data. This control is implemented within the tokenization system and the token vault to manage permissions, enforce authentication protocols, and monitor access logs.

Authorization rules are typically set based on roles or security levels, so sensitive information is only accessible to those who need it. This component also logs each access attempt to detect any unauthorized activity.
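A hedged Python sketch of role-based detokenization with an audit trail follows. The role names, the `GuardedVault` class, and the log format are hypothetical, and the sketch assumes the caller's identity and role have already been authenticated upstream.

```python
import secrets
from datetime import datetime, timezone

# Hypothetical role policy: which roles may detokenize.
DETOKENIZE_ROLES = {"payments-service", "fraud-analyst"}


class GuardedVault:
    def __init__(self) -> None:
        self._store: dict[str, str] = {}
        self.audit_log: list[dict] = []

    def tokenize(self, value: str) -> str:
        # 128 random bits; collisions are negligible for a sketch.
        token = "tok_" + secrets.token_hex(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str, caller: str, role: str) -> str:
        allowed = role in DETOKENIZE_ROLES
        # Every attempt is logged, whether or not it succeeds.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "caller": caller,
            "role": role,
            "token": token,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"role {role!r} may not detokenize")
        return self._store[token]


vault = GuardedVault()
t = vault.tokenize("123-45-6789")
print(vault.detokenize(t, caller="svc-billing", role="payments-service"))  # 123-45-6789
try:
    vault.detokenize(t, caller="intern-7", role="marketing")
except PermissionError as err:
    print(err)  # role 'marketing' may not detokenize
print([e["allowed"] for e in vault.audit_log])  # [True, False]
```

Logging denied attempts as well as successful ones is what lets security teams spot unauthorized activity after the fact.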

Token Management and Lifecycle Control

Tokens may need to be updated, revoked, or reissued over time, especially if security policies or compliance requirements change. Token management encompasses the processes for handling tokens throughout their lifecycle, from creation to retirement.

For instance, if a token is no longer needed or has become compromised, it may be retired and replaced with a new token.

Lifecycle control ensures that tokens remain secure and that the tokenization system adheres to the latest security standards, reducing the risk of exposure over time.
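The lifecycle operations described above can be sketched in Python as follows. The `LifecycleVault` class and its `rotate` and `revoke` methods are hypothetical names; the point is that a retired token stops resolving while the underlying value receives a fresh token.

```python
import secrets


class LifecycleVault:
    """Sketch of token rotation and revocation (hypothetical API)."""

    def __init__(self) -> None:
        self._active: dict[str, str] = {}  # token -> original value
        self._retired: set[str] = set()

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(16)
        self._active[token] = value
        return token

    def revoke(self, token: str) -> None:
        # Retired tokens stay on record so their later use can be flagged.
        self._active.pop(token)
        self._retired.add(token)

    def rotate(self, token: str) -> str:
        """Retire `token` (e.g. after suspected compromise) and issue a new one."""
        value = self._active[token]
        self.revoke(token)
        return self.tokenize(value)

    def detokenize(self, token: str) -> str:
        if token in self._retired:
            raise KeyError(f"token {token} has been retired")
        return self._active[token]


vault = LifecycleVault()
old = vault.tokenize("4111111111111111")
new = vault.rotate(old)         # e.g. the old token appeared in a leaked log
print(vault.detokenize(new))    # 4111111111111111
try:
    vault.detokenize(old)
except KeyError:
    print("old token rejected")  # old token rejected
```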

Meaningful Benefits

Data tokenization provides several key advantages, making it an essential tool for securing sensitive information, particularly in industries where data privacy and regulatory compliance are critical. Here are some of the primary benefits:

Enhanced Security

Data tokenization significantly reduces the risk of data breaches by replacing sensitive information with meaningless tokens. Since tokens cannot be reverse-engineered without the tokenization system, attackers who gain access to tokenized data cannot use it to recover the original information.

This method of isolating the actual data from other systems creates a higher level of security, especially for static, high-risk info such as payment card details and personal identifiers. By preventing unauthorized access to sensitive information, tokenization adds an extra layer of protection that can deter cyber threats and minimize potential damage in case of a breach.

Simplified Regulatory Compliance

Tokenization helps organizations meet the requirements of data privacy laws and regulations, such as the Payment Card Industry Data Security Standard (PCI-DSS), the General Data Protection Regulation (GDPR), and the California Consumer Privacy Act (CCPA). By keeping sensitive information separate from other systems and storing only tokens, companies can reduce the scope of compliance audits and the complexity of data security processes.

For example, by tokenizing credit card information, a company can limit its exposure to PCI-DSS requirements, since systems that store and process only tokens (and cannot detokenize them) may fall outside the scope of many of the requirements that apply to the original card data.

Cost Savings and Operational Efficiency

Tokenization can help organizations save costs on data storage and security infrastructure. Since tokenized data is less sensitive than the original data, it can be stored in environments with fewer security requirements, reducing the need for high-cost, secure data centers.

Additionally, tokenization reduces the scope of compliance, potentially lowering audit expenses and other associated costs. This streamlined approach can also boost operational efficiency by minimizing the need for frequent security updates and resource-intensive monitoring of sensitive information.

Sharing and Collaboration

Tokenization allows for secure data sharing across departments, partners, or third-party providers without compromising the security of sensitive information. Since only tokens are shared and not the actual details, companies can collaborate or analyze data without risking exposure. For example, a healthcare organization can share tokenized patient records with research institutions while securing patients’ sensitive information.

Mitigated Risk of Insider Threats

Tokenization reduces the risk of insider threats by restricting access to sensitive data, as only those with authorization can access the original information. Since tokens do not reveal any clues about the actual details, they are meaningless if accessed by unauthorized personnel within the organization.

This can be particularly valuable in high-risk environments where access control is crucial to prevent accidental or malicious data exposure by employees, contractors, or other insiders.

Scalability for Cloud and SaaS Environments

Tokenization is highly scalable and suitable for cloud-based and Software-as-a-Service (SaaS) applications, where sensitive data is frequently stored, processed, and transmitted. Tokenization protects sensitive information while allowing it to be stored in public or hybrid cloud environments, which often come with added compliance and security concerns.

By tokenizing data before it enters the cloud, organizations can reduce risks and safely expand their operations in cloud or SaaS environments without compromising security.
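One way to tokenize data before it enters the cloud is field-level tokenization: replace only the sensitive fields of a record and send the rest unchanged. In this Python sketch, the `SENSITIVE_FIELDS` list, the record layout, and the dictionary standing in for an on-premises vault are all hypothetical.

```python
import json
import secrets

# Hypothetical list of fields the organization classifies as sensitive.
SENSITIVE_FIELDS = ("card_number", "ssn", "email")


def tokenize_record(record: dict, vault: dict) -> dict:
    """Return a copy of `record` with sensitive fields replaced by tokens.

    `vault` stands in for the on-premises token vault (token -> value);
    only the returned copy would be sent to the cloud.
    """
    safe = dict(record)
    for field in SENSITIVE_FIELDS:
        if field in safe:
            token = "tok_" + secrets.token_hex(16)
            vault[token] = safe[field]
            safe[field] = token
    return safe


on_prem_vault: dict[str, str] = {}
customer = {
    "id": 42,
    "email": "j.doe@example.com",
    "card_number": "4111111111111111",
    "plan": "pro",
}
cloud_payload = json.dumps(tokenize_record(customer, on_prem_vault))
print("4111111111111111" in cloud_payload)  # False
```

Non-sensitive fields such as `plan` remain usable in the cloud, while the mapping needed to recover the card number never leaves the organization's own environment.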

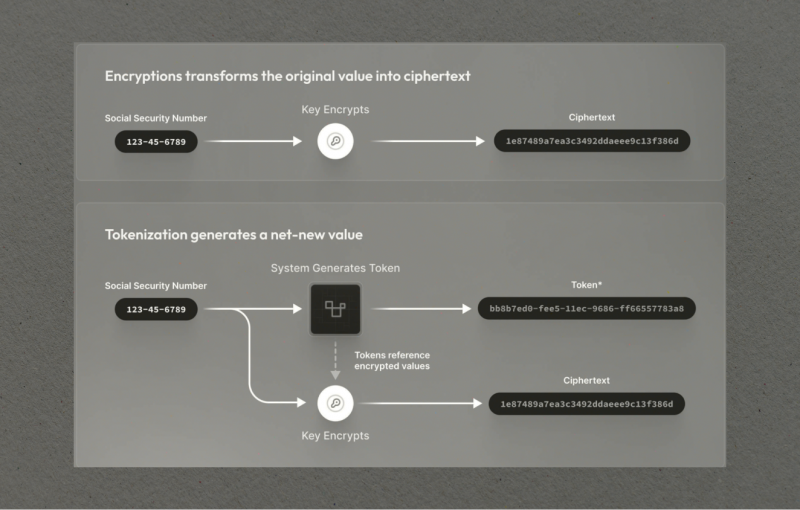

Tokenization vs. Encryption

Data tokenization and encryption are often considered similar security solutions. While both methods aim to protect sensitive info, they function in distinct ways:

- Process: Encryption transforms data into an unreadable format using a cryptographic key. Decryption requires the same key to access the original data. Tokenization, on the other hand, replaces sensitive details with a random, non-reversible token.

- Reversibility: Encrypted data can be decrypted with the correct key, allowing access to the original information. Vault-based tokens, on the other hand, cannot be mathematically reversed; the original information can only be retrieved through the secure token vault.

- Data Format: Conventional encryption typically alters the data format, potentially affecting its usability in specific applications. Tokenization can preserve the original format, allowing seamless integration with existing systems.

Tokenization is best suited for cases where sensitive data, like credit card information, personally identifiable information (PII), or healthcare details, needs to be stored but not frequently accessed. It is ideal for systems with strict data-isolation and security requirements.

Encryption is more appropriate for scenarios where information needs to be accessible or transferred securely, such as in communications, file storage, and collaborative work. Encryption allows quick access to data when required, making it a better fit for dynamic environments.

The two methods are often combined for layered security: tokenization offers stronger protection for static, high-risk data, while encryption suits cases requiring frequent access and processing.

Establishing Data Tokenization Solutions

Organizations must select the right solution when implementing tokenization to ensure it meets their specific security needs. This includes choosing systems that can handle token generation, data mapping, and secure storage while adhering to privacy regulations.

To effectively implement data tokenization, organizations should consider the following best practices:

- Identify all sensitive data elements that require protection.

- Choose a reliable and secure tokenization solution that meets your organization’s needs.

- Implement a robust token vault to store the original sensitive data and token mappings securely.

- Enforce strict access controls to limit token vault and tokenization system access.

- Conduct regular security audits to identify and address potential vulnerabilities.

- Train employees on data security best practices and the importance of protecting sensitive information.
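Identifying sensitive data elements usually starts with scanning existing data stores and logs. The Python sketch below shows one simple heuristic for payment card numbers, assumed for illustration: a regular expression finds runs of 13 to 19 digits (optionally separated by spaces or dashes), and the Luhn checksum filters out most random numbers. The function names are hypothetical, and real discovery tools cover many more data types and still produce some false positives.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by spaces or dashes.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if `number` passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d = d * 2 - 9 if d > 4 else d * 2
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings in `text` that look like payment card numbers."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


log_line = "order 1234567890123 paid with 4111 1111 1111 1111, ref 5500-0000-0000-0004"
print(find_card_numbers(log_line))  # ['4111111111111111', '5500000000000004']
```

The 13-digit order number matches the pattern but fails the Luhn check, so it is not reported.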

Expected Challenges

While data tokenization offers substantial security benefits, it also has certain challenges and limitations. Understanding these constraints is essential for organizations considering tokenization as part of their data protection strategy.

- Complex Integration: Integrating a tokenization system across various data centers and internal systems can be complex and time-consuming.

- Maintenance Costs: Maintaining a tokenization solution and mapping tokens to original data requires consistent updates and secure storage practices.

- Integrity Issues: Ensuring that tokenized data maps back to the original value accurately and without errors is essential, especially for transaction processing.

- Limited Interoperability with Other Systems: Tokenized data may not be compatible with all systems or applications, as many software solutions are not designed to handle tokens instead of raw data. Integrating tokenized data into third-party applications, analytics platforms, or partner systems can be challenging, especially if these systems lack native tokenization support.

- Limited Use Cases for Dynamic Data: Data tokenization is best suited for static information that does not need to be frequently modified or accessed, such as credit card numbers or PII. For dynamic data that changes often, tokenization can be less effective, as each change may require a new token to be generated, increasing complexity.

In summary, while data tokenization provides robust protection for sensitive information, it comes with specific challenges. Organizations must consider tokenization’s potential complexities, costs, and limitations before adopting it as a security measure.

Use Cases Across Industries

Data tokenization has found widespread adoption in various industries:

Payment Processing

Tokenization is a fundamental technology in the payment processing industry, where it is used to protect credit card numbers and other financial information. When a customer makes a purchase, the payment processor replaces the credit card number with a token, which is used for the transaction without exposing the actual card data. This practice helps companies comply with PCI-DSS standards, which require stringent measures for protecting payment card information.

Healthcare

In healthcare, tokenization is used to secure sensitive patient information such as medical records, insurance numbers, and personal identifiers. Tokenization helps healthcare providers comply with regulations like the Health Insurance Portability and Accountability Act (HIPAA) by ensuring that patient data is stored and transmitted securely.

E-commerce and Retail Security

E-commerce companies often handle vast amounts of customer data, including payment details, addresses, and contact information, which makes them a target for cyberattacks. Tokenization enables these businesses to protect customer data during online transactions.

For example, when customers save their payment information for future purchases, the e-commerce platform can store a tokenized version of the credit card number rather than the actual card details.

Banking and Financial Services

Tokenization is also extensively used in the banking and financial services industry to protect sensitive information, such as account numbers, loan details, and personal identifiers.

This is particularly valuable in areas like mobile banking and digital transactions, where sensitive data is frequently transmitted over networks.

Cloud Storage and SaaS Applications

Tokenization is increasingly relevant in cloud storage and SaaS applications, where organizations store and process data outside of their own secure networks. Tokenizing data before it leaves those networks allows businesses to leverage cloud computing's scalability and flexibility without compromising data security.

Telecommunications

Telecommunications companies manage a vast amount of personal information, including customer addresses, billing details, and usage data. Tokenization allows telecom providers to use customer information for analytics and billing without exposing it to unauthorized access.

Government and Public Sector

Government agencies often handle large volumes of sensitive data, including Social Security numbers, tax information, and personal records. For example, when a citizen's information is shared for administrative purposes, a tokenized version of the data can be used to protect privacy.

Insurance Industry

The insurance industry manages sensitive personal information, such as medical records, financial details, and claims history. Tokenization enables insurers to protect policyholder data while processing claims, managing policies, or sharing information with third-party providers.

Real Estate and Property Records

Tokenization is used in the real estate sector to secure property records, transaction histories, and client data. When property data is tokenized, only the token is accessible, protecting details such as ownership history and transaction amounts.

Conclusion

Data tokenization represents a crucial security measure for organizations handling sensitive information. Its ability to protect data while maintaining business utility makes it an invaluable tool in today’s digital landscape. By understanding and implementing data tokenization, organizations can protect sensitive information, meet compliance standards, and build a resilient defense against data breaches.

FAQ

What is needed to protect sensitive data?

Common measures include encryption, tokenization, and strict access controls. Encryption is a fundamental component: it converts sensitive information into a coded form that is unreadable to anyone without the proper decryption key.

How does a data tokenization solution work?

A tokenization platform replaces sensitive data with non-sensitive substitutes called tokens and keeps the mapping between them in a secure system. This strengthens data protection and reduces fraud risk in financial transactions.

What is the data tokenization process?

Tokenization, when applied to data security, is the process of substituting sensitive info with a non-sensitive equivalent, referred to as a token, that has no intrinsic or exploitable meaning or value.

What is an encryption key?

An encryption key is a value that an encryption algorithm uses to transform plaintext into ciphertext, or to transform ciphertext back into plaintext during decryption.